Autonomous systems, robotics, smart urban planning, and environmental monitoring are no longer science fiction but everyday realities, and they depend on precise sensing and intelligent interpretation of spatial data. Lidarmos merges LiDAR (Light Detection and Ranging) with motion segmentation to provide not only a snapshot of the physical environment but also a dynamic understanding of the objects moving within it. By integrating laser-scanning sensors with machine learning and real-time analysis, it changes how machines perceive the world, enabling safer autonomous navigation, more efficient mapping, improved environmental insight, and smarter infrastructure management. This article explores what Lidarmos is, how it works, where it is used, its benefits and challenges, and what the future holds.

What Is Lidarmos?

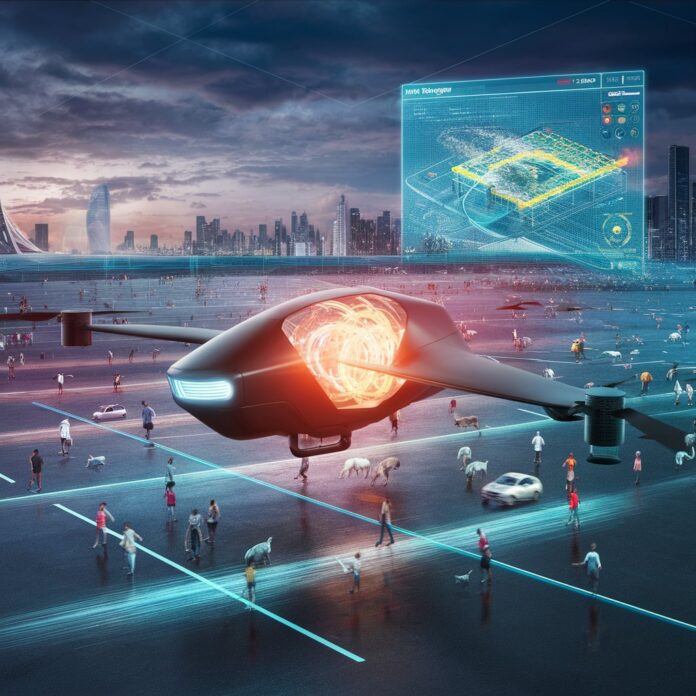

Lidarmos is essentially LiDAR with motion segmentation built in (sometimes written LiDAR-MOS). Traditional LiDAR systems fire laser pulses, measure the return time, and build detailed 3D “point clouds” that map out an environment’s geometry. While extremely valuable, these point clouds are static: every object is treated as non-moving unless the data is processed further. Motion segmentation adds another layer by analyzing changes over time to determine which objects are moving (e.g., vehicles, pedestrians, animals) and which are fixed (e.g., buildings, trees, parked cars). The result is a richer, more actionable understanding of the environment.

The term “Lidarmos” refers to the combination of LiDAR hardware and algorithms that process temporal data to distinguish motion. It is especially useful where awareness of dynamic objects is critical: a self-driving car detecting a child crossing the road, a drone navigating around moving obstacles, or a survey robot working in a changing environment.

How Lidarmos Works

Data Collection

Lidarmos begins with LiDAR sensing: laser pulses are emitted in many directions, bounce off surfaces, and return to the sensor. The round-trip time of each pulse gives the distance to the surface it hit, and the accumulated returns form a point cloud representing the 3D space. High pulse repetition rates, dense scanning, multiple scan angles, and mobile mounting platforms (vehicles, drones, robots) allow rapid coverage of complex environments.
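As a rough illustration of how a timed pulse becomes a 3D point, the sketch below converts a round-trip time into a range (distance = speed of light × time / 2) and then into Cartesian coordinates from the beam angles. The function names and the sample returns are hypothetical, chosen only for this example; real sensors report data in their own formats.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum


def range_from_time_of_flight(round_trip_s):
    """Distance to the surface; the pulse travels out and back, so halve it."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def to_cartesian(range_m, azimuth_rad, elevation_rad):
    """Convert one return (range plus beam angles) into an (x, y, z) point."""
    horizontal = range_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )


# Hypothetical returns: (round-trip time in seconds, azimuth, elevation)
returns = [(200e-9, 0.0, 0.0), (400e-9, math.pi / 2, 0.0)]

point_cloud = [
    to_cartesian(range_from_time_of_flight(t), az, el) for t, az, el in returns
]
```

A 200-nanosecond round trip corresponds to a surface roughly 30 m away, which shows why LiDAR timing electronics must resolve very small intervals.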

Temporal / Motion Analysis

What sets Lidarmos apart is that it compares successive LiDAR scans over time. Motion is inferred by tracking changes between point clouds: points that shift, disappear, or appear. Machine learning algorithms, often deep neural networks, are trained to classify points or objects as moving or static and sometimes to predict trajectories. Filtering removes noise (weather effects, sensor errors), and segmentation methods group points into objects (cars, people, and so on).
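The idea of comparing successive scans can be sketched with a deliberately naive baseline: divide space into voxels and flag any point in the current scan whose voxel was empty in the previous scan. This is not the learned approach described above, only a minimal stand-in. It assumes both scans are already expressed in the same coordinate frame (that is, the sensor's own motion has been compensated), and the names `voxel_key` and `label_moving` and the 0.5 m voxel size are illustrative choices.

```python
import math


def voxel_key(point, voxel_size):
    """Index of the cubic voxel that contains the point."""
    return tuple(math.floor(c / voxel_size) for c in point)


def label_moving(previous_scan, current_scan, voxel_size=0.5):
    """Return one flag per current point: True if its voxel was empty before."""
    occupied_before = {voxel_key(p, voxel_size) for p in previous_scan}
    return [voxel_key(p, voxel_size) not in occupied_before for p in current_scan]


# Hypothetical scans: the middle object has moved about 1.2 m along x.
previous_scan = [(0.1, 0.1, 0.0), (5.2, 1.1, 0.0), (10.0, 0.0, 0.0)]
current_scan = [(0.12, 0.11, 0.0), (6.4, 1.1, 0.0), (10.02, 0.01, 0.0)]

labels = label_moving(previous_scan, current_scan)

# Keep only the points judged static, e.g. for building a cleaner map.
static_points = [p for p, moving in zip(current_scan, labels) if not moving]
```

A baseline like this is sensitive to noise, occlusion, and points that sit near voxel boundaries, which is part of the reason practical systems rely on trained models and filtering instead.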

Real-Time Processing & Edge Use

Because many applications require very low latency (e.g. obstacle avoidance in autonomous vehicles), Lidarmos systems often incorporate real-time processing capabilities. Some of this may happen on edge devices (on the vehicle or robot), while heavier computation or data aggregation may occur in the cloud or centralized servers. The system must balance data volume, processing speed, and accuracy.
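One common way to trade a little accuracy for speed on constrained hardware is to thin each scan before further processing. The sketch below shows voxel-grid downsampling in plain Python: all points that fall in the same voxel are replaced by their centroid. The function name `voxel_downsample` and the 1.0 m voxel size are assumptions made for this example, not settings taken from any particular Lidarmos system.

```python
import math
from collections import defaultdict


def voxel_downsample(points, voxel_size):
    """Replace all points inside each voxel with their centroid."""
    buckets = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / voxel_size) for c in p)
        buckets[key].append(p)
    return [
        tuple(sum(axis) / len(group) for axis in zip(*group))
        for group in buckets.values()
    ]


# Hypothetical scan: two nearby points and one distant point.
scan = [(0.1, 0.1, 0.1), (0.3, 0.3, 0.3), (2.0, 2.0, 2.0)]
reduced = voxel_downsample(scan, voxel_size=1.0)
```

A larger voxel size removes more points and lowers processing time, at the cost of spatial detail, so the value is usually tuned per application.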

Key Features of Lidarmos

- Real-Time Object Detection: Detects moving objects as they move, rather than after the fact.
- Moving Object Segmentation (MOS): Separates moving from static parts of the scene, which helps with mapping, navigation, and collision avoidance.
- High Spatial Accuracy: LiDAR provides precise distance and structural data.
- Robust to Lighting Conditions: LiDAR doesn’t rely on visible light, which helps in dark or low-light conditions.
- Flexible Deployment: Can be mounted on vehicles, drones, static platforms, or handheld devices.
- Scalability: Handles large point clouds; newer models aim for efficient processing even on limited hardware.

Applications of Lidarmos

- Autonomous Vehicles: Safe navigation; detecting pedestrians, cars, and bicycles; distinguishing moving from parked objects; making split-second decisions.
- Robotics & Drones: Path planning in dynamic environments, obstacle avoidance, and environmental mapping where the scene changes.
- Smart Cities: Traffic management and pedestrian flow analysis; Lidarmos units installed at intersections can monitor movement and help optimize infrastructure.
- Construction & Surveying: Monitoring progress, detecting changes (movement of equipment and people) for safety, and tracking site dynamics.
- Environmental Monitoring & Agriculture: Tracking animal movement, monitoring crop growth, mapping erosion or floods, and detecting changes over time.
- Safety & Security: Perimeter monitoring, intrusion detection, and alerts when moving entities are detected in restricted zones.

Benefits of Lidarmos

Lidarmos brings several advantages over traditional, static LiDAR processing:

- Dynamic Context Awareness: Knowing not only what is where, but also what is moving and how.
- Increased Safety: Early detection of moving hazards, which is crucial in autonomous systems.
- Efficiency Gains: Automated motion detection reduces the need for manual post-processing.
- Better Mapping Accuracy: Removing moving objects from maps gives cleaner data for mapping and planning.
- Versatility: Works across many lighting and environmental conditions and can be adapted to various platforms.

Challenges & Limitations

However, it’s not all perfect; there are hurdles:

- Cost and Complexity: High-quality LiDAR sensors are expensive, and motion segmentation algorithms require significant computational resources.
- Data Volume & Processing Demands: Successive high-density scans generate enormous amounts of data; storing, transferring, and processing it is non-trivial.
- Environmental Interference: Rain, fog, snow, or airborne particles can interfere with returns and create noise. Occlusion (objects blocking the view) also hides parts of the scene.
- Latency & Real-Time Constraints: For many applications, such as autonomous driving, even delays of tenths of a second can be critical.
- Training Data and Edge Cases: Machine learning models need good, representative training data; unusual or rare scenarios (animals crossing the road, dumped debris, and the like) can cause misclassification.
- Regulation and Privacy Concerns: Mapping or scanning public areas, or using elevated sensors, may raise privacy or legal issues.
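To make the data-volume point in the list above concrete, here is a back-of-the-envelope estimate of the raw data rate. The figures are assumptions for illustration only: a sensor producing one million points per second, with each point stored as four 32-bit floats (x, y, z, intensity). Actual rates and point formats vary by sensor.

```python
def raw_data_rate_mb_per_s(points_per_second, bytes_per_point):
    """Uncompressed data rate in megabytes per second (1 MB = 10**6 bytes)."""
    return points_per_second * bytes_per_point / 1_000_000


POINTS_PER_SECOND = 1_000_000  # assumed sensor output, not a measured value
BYTES_PER_POINT = 16           # assumed layout: x, y, z, intensity as float32

rate_mb_s = raw_data_rate_mb_per_s(POINTS_PER_SECOND, BYTES_PER_POINT)
per_hour_gb = rate_mb_s * 3600 / 1000  # gigabytes per hour of recording
```

Under these assumptions a single sensor yields 16 MB per second, or about 57.6 GB per hour, before any compression or downsampling.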

Future Trends & What to Expect

Looking forward, the evolution of Lidarmos is likely to follow these trajectories:

- Sensor Miniaturization & Cost Reduction: Cheaper, smaller LiDAR hardware so that more devices (drones, robots, consumer electronics) can use it.
- More Efficient & Lightweight Algorithms: Machine learning models that require less power and run faster, with lower latency.
- Integration with Other Sensors & Systems: Combining LiDAR with radar, cameras, GPS, and IMUs for richer scene understanding; fusing their data for a more robust system.
- Edge Computing & On-Device Intelligence: More processing done locally to reduce delay, with less dependence on remote servers.
- Advances in Motion Prediction: Not just detecting motion, but predicting the trajectories and behavior of moving objects.
- Wider Adoption in Infrastructure & Urban Design: Sensors embedded in cities, with routine use in managing traffic, safety, and the environment.
- Regulations & Ethical Use: Standards for privacy, data security, and the ethics of scanning public spaces.

Conclusion

Lidarmos represents a significant step forward in spatial sensing technology. By combining LiDAR’s precise 3D mapping with motion segmentation, it shows not only where things are but also what is moving and how, so systems can respond intelligently. Real challenges remain, including hardware costs, data handling, environmental interference, latency, and privacy, but the benefits for safety, efficiency, insight, and automation are compelling. As sensors get smaller, algorithms smarter, and deployments more widespread, Lidarmos will likely become a foundational technology in autonomous vehicles, robotics, smart cities, environmental monitoring, and many other fields. For anyone working with spatial sensing, motion detection, or autonomy, understanding Lidarmos is increasingly essential.

Frequently Asked Questions (FAQ)

- What does the name “Lidarmos” stand for?

  Lidarmos is shorthand for LiDAR plus motion segmentation, often written LiDAR-MOS. It denotes a system that not only collects spatial data via LiDAR but also identifies which objects in the scanned environment are moving.

- How is Lidarmos different from regular LiDAR systems?

  Regular LiDAR systems create static 3D point clouds that show where objects are, but they don’t inherently recognize whether objects are moving. Lidarmos adds temporal motion segmentation to recognize motion directly, which is crucial for dynamic interaction and real-time tasks such as obstacle avoidance.

- What types of applications benefit most from Lidarmos?

  Applications that require understanding dynamic scenes: autonomous vehicles, robotics in changing environments, smart city traffic management, security systems, construction monitoring, and environmental monitoring where change over time matters (e.g., flood detection, forest growth).

- What are the key limitations or challenges when implementing Lidarmos?

  The major challenges are sensor cost, handling large volumes of high-density data, ensuring real-time performance (low latency), dealing with environmental noise (weather, occlusions), the need for robust training data (especially for rare or unusual motion scenarios), and legal and privacy issues.

- Can Lidarmos work in harsh or challenging environments?

  Yes, but with caveats. LiDAR can suffer in heavy rain, fog, or snow, or when particles are airborne; returns may be noisy or lost. Occlusions and reflective surfaces can also hamper accuracy. Robust motion segmentation algorithms and filtering help, and sensor calibration is vital. Using multiple sensors or complementary modalities (radar, camera) improves robustness.

- What is the future of Lidarmos technology?

  The future likely includes smaller, cheaper LiDAR units; smarter, more efficient AI for motion segmentation; better integration with edge devices; increased use in infrastructure and public environments; improved prediction of motion; ethical and legal frameworks for data use; and wider adoption across industries.